Tips before migrating to a newer R version

This post is based on real events.

Several times, after installing the latest version of R and reinstalling all the packages I had in the previous version, I ran into problems. The same happens when updating packages after a long gap.

I decided to write this post after seeing how the community received a short post I made on the topic.

This post (also available in Spanish here) is not meant to discourage installing new versions of R. On the contrary, it aims to warn about the "dark side" of migrating and to help keep our projects stable over time.

Luckily, functions usually change for the better, sometimes much better, as is the case with the tidyverse suite.

🗞 (A short announcement for Spanish speakers 🇪🇸) Three weeks ago I created the data school EscuelaDeDatosVivos.AI, where you can find a free introductory R course for data science (covering the tidyverse and funModeling, among others) 👉 Desembarcando en R

Projects that are not frequently executed

For example, after migrating, when building the Data Science Live Book (written 100% in R Markdown), I saw function deprecation warnings. Naturally, I have to remove those calls or switch to the new functions.
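Rather than scrolling through a long build log, one way to catch these messages in bulk is to wrap the run in a calling handler that records every warning. A minimal sketch in base R; `collect_warnings()` is a hypothetical helper name and `old_script.R` is a placeholder for your own script or build:

```r
# Hypothetical helper: evaluate an expression, record every warning it raises
# (e.g. deprecation notices), and keep running instead of printing them.
collect_warnings <- function(expr) {
  seen <- character(0)
  value <- withCallingHandlers(
    expr,
    warning = function(w) {
      seen <<- c(seen, conditionMessage(w))  # remember the message text
      invokeRestart("muffleWarning")         # continue without printing it
    }
  )
  list(value = value, warnings = seen)
}

# Usage (old_script.R is a placeholder):
# result <- collect_warnings(source("old_script.R"))
# grep("deprecated", result$warnings, value = TRUE)
```

Filtering the collected messages for "deprecated" gives a to-do list of calls to replace before the old functions disappear.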

I also ran into a case where some R Markdown parameters had changed.

Another case 🎬

Imagine the following flow: R 4.0.0 is installed, followed by the latest version of every package. Taking ggplot2 as an example, say we jump from version 2.8.1 to 3.5.1.

Version 3.5.1 no longer has a function we relied on, because it was deprecated and then removed, so the script fails. Or a function may have changed (an example from the tidyverse: mutate_at, mutate_if), altering what is called its signature, e.g. the .vars parameter.

Package installation

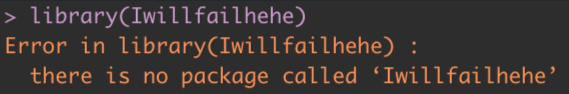

If we migrate without reinstalling everything we had before, the next time we run an old script we will hit this problem.

Some people recommend listing all the installed packages and generating a script that reinstalls them.
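A minimal sketch of that approach in base R, split into one step for the old version and one for the new. The function names and the `packages.rds` file are hypothetical, and CRAN (`https://cloud.r-project.org`) is assumed as the repository:

```r
# Run in the OLD R version: save the names of user-installed packages.
# priority = "NA" skips base and recommended packages, which ship with R.
save_package_list <- function(file = "packages.rds") {
  pkgs <- unique(unname(installed.packages(priority = "NA")[, "Package"]))
  saveRDS(pkgs, file)
  invisible(pkgs)
}

# Run in the NEW R version: install only the packages that are missing.
reinstall_packages <- function(file = "packages.rds",
                               repos = "https://cloud.r-project.org") {
  pkgs <- readRDS(file)
  missing <- setdiff(pkgs, rownames(installed.packages()))
  if (length(missing) > 0) {
    install.packages(missing, repos = repos)
  }
  invisible(missing)
}
```

This reinstalls the latest CRAN versions, not the exact versions you had before. Packages that came from GitHub or other non-CRAN sources will not be found this way and need to be reinstalled by hand.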

Another solution is to manually copy the packages from the old R version's library folder to the new one, since each installed package is simply a folder inside the R library.
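Copied folders may still be built for the old R version. As a sketch, the `Built` column returned by `installed.packages()` shows which R version each package was built under, so the mismatches can be listed. The helper name is hypothetical:

```r
# Hypothetical helper: list packages built under a different major.minor
# R version than the one running now (e.g. folders copied from an old install).
packages_built_for_other_r <- function(lib.loc = NULL) {
  ip <- installed.packages(lib.loc = lib.loc)
  # Keep only major.minor of the "Built" field, e.g. "4.3.1" -> "4.3"
  built <- sub("^[^0-9]*([0-9]+\\.[0-9]+).*$", "\\1", ip[, "Built"])
  # Current R version as major.minor, e.g. "4.3"
  current <- paste(R.version$major, sub("\\..*$", "", R.version$minor), sep = ".")
  unique(unname(ip[!is.na(built) & built != current, "Package"]))
}

# Usage:
# packages_built_for_other_r()
```

If anything shows up, `update.packages(checkBuilt = TRUE, ask = FALSE)` treats packages built under an earlier major.minor R version as outdated and reinstalls them for the new one.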

R on servers

Another case: R is installed on a server with processes running every day. After the migration, some functions change their signature; for example, a parameter now expects a different data type.

This should rarely happen if you upgrade packages frequently. The normal flow for removing a function from an R package is to first announce it with the famous "deprecated" warning (see: Mark a function as deprecated in customised R package).
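Base R provides `.Deprecated()` for exactly this step. A minimal sketch, with hypothetical function names `sales_total()` (old) and `summarise_sales()` (new):

```r
# New function that replaces the old one (hypothetical name).
summarise_sales <- function(x) {
  sum(x, na.rm = TRUE)
}

# Old function kept for at least one release so existing code still runs,
# but every call now emits a warning pointing to the new name.
sales_total <- function(x) {
  .Deprecated("summarise_sales")
  summarise_sales(x)
}

# Returns 30, with a warning that 'sales_total' is deprecated
# and that 'summarise_sales' should be used instead.
sales_total(c(10, 20, NA))
```

Once users have had at least one release to switch, a later version can remove `sales_total()` entirely.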

If the deprecation is announced in version N+1 and we jump straight from N to N+2, we never see the warning, and the function is simply gone.
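One way to notice such a jump is to record the package versions a script was last tested with and compare them against what is installed before running it. A minimal sketch; `newer_than_tested()` and the recorded versions are hypothetical, and base packages are used only so the example runs anywhere:

```r
# Hypothetical helper: return the packages whose installed version is newer
# than the version the script was last tested with.
newer_than_tested <- function(tested) {
  is_newer <- vapply(names(tested), function(pkg) {
    utils::packageVersion(pkg) > package_version(tested[[pkg]])
  }, logical(1))
  names(tested)[is_newer]
}

# Versions recorded when the script last ran fine (illustrative values).
tested <- c(stats = "0.0.1", utils = "999.0.0")
changed <- newer_than_tested(tested)
if (length(changed) > 0) {
  message("Review before running, these packages changed: ",
          paste(changed, collapse = ", "))
}
```

Running the checks with `options(warn = 2)` in the testing environment goes one step further: every warning, including deprecation notices, becomes an error, so it cannot slip by unnoticed.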

So is it not advisable to upgrade packages and R?

As I said at the beginning, of course I encourage the migration.

We must be alert and test the projects we already have running.

Otherwise, we would miss out on many of the conveniences that today's languages offer thanks to their communities. And this is not specific to R.

📝 Now that tidymodels is out, here's another post that might interest you: How to use recipes package from tidymodels for one hot encoding 🛠

Some advice: Environments

Python has a very useful concept, the virtual environment: it is quick to create, and it makes every library installation go into the project folder.

Then you run pip freeze > requirements.txt, and all the libraries, with their versions, are saved to a text file. With it, anyone can quickly recreate the environment you developed in by running pip install -r requirements.txt (see: Why and How to make a Requirements.txt).

This is not so easy in R. There is packrat, but it has its complexities, for example when some packages come from GitHub repositories.
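The core idea of a virtual environment, a library folder that belongs to the project, can be approximated in base R by putting a project folder first in `.libPaths()`. A minimal sketch; the helper name and the `r-lib` folder name are hypothetical, and unlike packrat or renv this records no versions and produces no lockfile:

```r
# Hypothetical helper: create a project-local library folder and put it first
# in the library search path. install.packages() installs into .libPaths()[1]
# by default, and library() searches this folder first.
use_project_library <- function(project_dir = ".") {
  lib <- file.path(project_dir, "r-lib")
  dir.create(lib, showWarnings = FALSE, recursive = TRUE)
  .libPaths(c(lib, .libPaths()))
  invisible(normalizePath(lib))
}

# Usage, at the start of a session in the project folder:
# use_project_library()
# install.packages("dplyr")  # installed into ./r-lib, not the global library
```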

Augusto Hassel just told me about the renv library (also from RStudio! 👏). I quote the page:

"The renv package is a new effort to bring project-local R dependency management to your projects. The goal is for renv to be a robust, stable replacement for the Packrat package, with fewer surprises and better default behaviors."

You can see the slides from renv: Project Environments for R, by Kevin Ushey.

Docker

Augusto also told me about Docker as a solution:

"Using Docker we can encapsulate the environment needed to run our code through an instruction file called Dockerfile. This way, we'll always be running the same image, wherever we pick up the environment."

Here's a post by him (in Spanish): My First Docker Repository

Conclusions

✅ If you have R in production, have a testing environment and a production environment.

✅ Install R, your libraries, and then check that everything is running as usual.

✅ Have unit tests that automatically check that the data flow is not broken. In R, check out testthat.

✅ Update all libraries every X months; don't let too much time go by.
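For the unit-test item, a test can pin down the shape of the data a step produces, so an upgrade that silently changes it fails loudly. A minimal sketch, assuming testthat is installed; `clean_sales()` is a hypothetical pipeline step and its columns are made up:

```r
library(testthat)

# Hypothetical pipeline step: drop rows with a missing amount, add a column.
clean_sales <- function(df) {
  df <- df[!is.na(df$amount), , drop = FALSE]
  df$amount_k <- df$amount / 1000
  df
}

test_that("clean_sales keeps the expected columns and rows", {
  input <- data.frame(id = 1:3, amount = c(1500, NA, 2500))
  out <- clean_sales(input)
  expect_named(out, c("id", "amount", "amount_k"))
  expect_equal(nrow(out), 2)
  expect_equal(out$amount_k, c(1.5, 2.5))
})
```

Saved as files under `tests/testthat/`, such tests can be run together with `testthat::test_dir("tests/testthat")` after every upgrade in the testing environment.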

The moral: solving version, installation, and environment problems is also part of being a data scientist.

Moss! What did you think of the post?

Happy update!